Real-time digital data streaming from smartphone apps offers us the opportunity to reach radically new understandings of human behavior, but it also requires that we break away from outdated models of data collection and analysis in order to take full advantage of the richness of the information available.

This strategy is most effectively approached through transdisciplinary alliances that can offer new perspectives on work we’ve become too close to. This is what my colleagues at the USC mHealth Collaboratory have been working on. The Collaboratory includes psychologists and sociologists, nurses and doctors, economists and political scientists, filmmakers and artists, engineers and computer scientists, social workers and small business owners, poets and writers, to name just a few. Collaborating with colleagues across diverse fields can open up new ways of defining variables and of analyzing the data that new technologies and the Internet of Things give us. These new approaches allow for leaps in understanding that do more than improve on old models: they create entirely new ones. Ultimately, these new computational models of human behavior put us on the road to innovative software such as a sophisticated, automated digital “doctor in your pocket” that administers highly personalized JITAIs (Just-In-Time Adaptive Interventions), which can help people shift their behavior and maintain behavior change much more effectively than current approaches (see here, here and here).

The idea of “engagement” is a good example of how this kind of thinking can advance research practice. We used to have people play a game on a phone or tablet and then give them a questionnaire that asked directly, “How did you like the game?” That changed when, I imagine, a game designer or computer scientist thought: “I’ve got all this data. How long did you stay on the page? How many times did you click? We haven’t been fully using any of it. We’re now going to call that engagement.”

This example reveals the limits of traditional assumptions about data collection, and it shows how an old variable, engagement, can be developed into a new, dynamic model: dynamic because it can be measured and manipulated in real time. To make the most of these data streams, we have to accept that there are variables we don’t yet have words or concepts for. Ideally, we should be able to look at a large database of real-time data and extract variables we wouldn’t previously have known to look for.
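To make the shift from a post-game questionnaire to a live measure concrete, here is a minimal sketch of engagement computed directly from an interaction log over a sliding time window. Everything in it is a hypothetical illustration rather than the Collaboratory’s definition: the `Event` and `RollingEngagement` names, the two event types (`"click"` and `"view"`), and the choice of clicks per minute plus total dwell time as the engagement indices are all assumptions. It also assumes events arrive in time order.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, Dict


@dataclass
class Event:
    t: float            # seconds since session start
    kind: str           # hypothetical event types: "click" or "view"
    dwell: float = 0.0  # seconds spent on a page (used for "view" events)


class RollingEngagement:
    """Engagement as a live quantity computed from an interaction log.

    Hypothetical definition: clicks per minute and total dwell time over a
    sliding window. Assumes events are added in time order.
    """

    def __init__(self, window_s: float = 60.0) -> None:
        self.window_s = window_s
        self.events: Deque[Event] = deque()

    def add(self, event: Event) -> None:
        self.events.append(event)
        self._evict(event.t)

    def _evict(self, now: float) -> None:
        # Drop events that fall outside the window ending at `now`.
        while self.events and self.events[0].t <= now - self.window_s:
            self.events.popleft()

    def score(self, now: float) -> Dict[str, float]:
        self._evict(now)
        clicks = sum(1 for e in self.events if e.kind == "click")
        dwell = sum((e.dwell for e in self.events if e.kind == "view"), 0.0)
        return {
            "clicks_per_min": clicks / (self.window_s / 60.0),
            "dwell_s": dwell,
        }
```

Because the score can be recomputed after every event, an app could in principle react to a drop in engagement while the session is still going, which is what makes the variable dynamic rather than a one-off rating.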

Right now we’re badly hobbled by our own vocabulary, and also by how siloed our disciplines are. One of the big things the USC mHealth Collaboratory is trying to do is create an atmosphere that fosters transdisciplinary research, the kind that will expand our horizons in all kinds of ways.

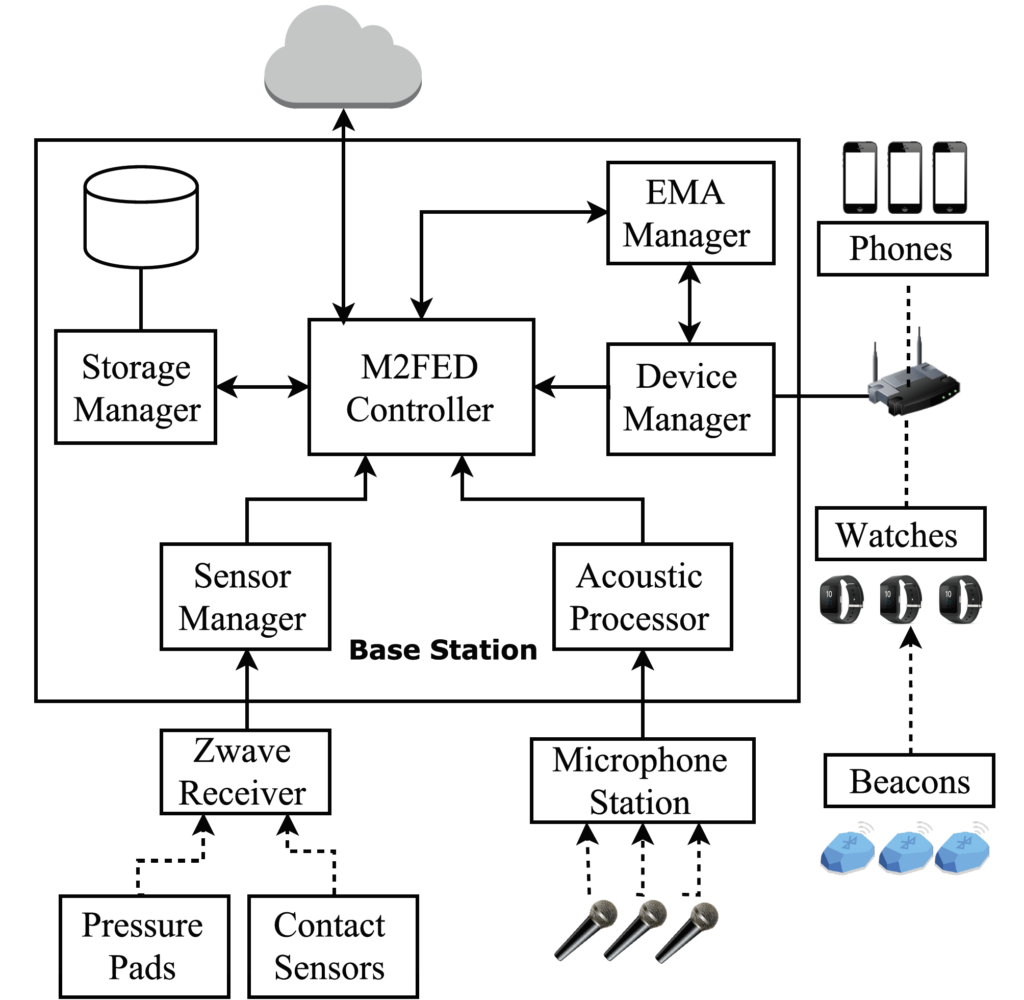

For instance, I’m currently working on a project called Monitoring and Modeling Family Eating Dynamics (M2FED) with engineers and computer scientists at the University of Virginia and behavioral and social scientists at USC. We are building a Cyberphysical System (CPS), a network of interacting elements such as hardware (smartwatches, for instance) and software (apps, for instance), to understand family eating dynamics in the home. We wanted the CPS to be able to identify various emotions, including stress. Stress turned out to be especially hard for the computer scientists and engineers to pin down from voice traces using algorithms. What they ultimately developed, as all of the collaborators internalized a bit of each other’s expertise and learned to speak each other’s languages, was an interesting, ingenious and useful approach to the problem.

This creative solution counters a misconception many of us hold: that disciplines should stick to their ‘areas of expertise’. Working this way certainly requires humility, along with a flexibility and creativity that don’t always come naturally, but it can lead to places you’d never reach on your own.

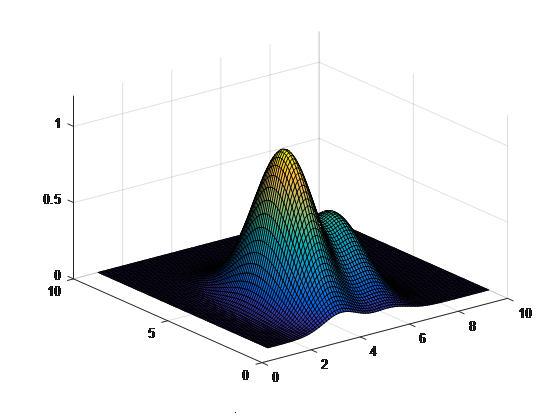

Once you have the data, there’s also the question of analysis. For instance, we might be collecting continuous accelerometry data, and instead of merely averaging it into something like steps per day or minutes of vigorous physical activity per hour, we would want to look for patterns that might give us usable indices. There’s all this fine-grained material on behavior loops, on how past behavior influences future behavior, and on how one person’s behavior influences another person’s depending on timing, context, relationship and many other (perhaps still unknown) variables, but we really don’t know yet how to extract that kind of wisdom from the data. We’re still stuck on the lagged models we’ve been using for years: did what happened at one moment influence what happened 10 minutes later? What we need instead is to look at the multidimensional space as a blanket, with many different variables peeking out at us from underneath it, whose shape shifts over time depending on the values of the variables relative to one another.
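For readers less familiar with the lagged models mentioned above, they usually reduce to a single question: does X at time t predict Y at time t plus some fixed lag? A minimal sketch of that single-lag model is an ordinary least-squares slope, assuming two equally spaced series of the same length (for example, hypothetical per-minute accelerometry counts for a parent and a child, so that `lag=10` means “10 minutes later”). The function name `lagged_slope` and the data layout are illustrative assumptions.

```python
from typing import Sequence


def lagged_slope(x: Sequence[float], y: Sequence[float], lag: int) -> float:
    """OLS slope of y[t + lag] regressed on x[t]: the classic single-lag model.

    Assumes x and y are equally spaced series of the same length,
    e.g. one value per minute, so lag=10 means "10 minutes later".
    """
    if len(x) != len(y) or not 0 <= lag < len(x):
        raise ValueError("need equal-length series and 0 <= lag < len(x)")
    xs = x[: len(x) - lag]  # predictor at time t
    ys = y[lag:]            # outcome at time t + lag
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxx = sum((a - mx) ** 2 for a in xs)
    if sxx == 0:
        raise ValueError("predictor has no variance over the usable range")
    sxy = sum((a - mx) * (b - my) for a, b in zip(xs, ys))
    return sxy / sxx
```

Its narrowness is the point: it examines one pair of variables at one fixed lag and assumes that relationship holds unchanged for the whole recording, which is exactly what the “blanket” view tries to move beyond.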

So how do we move all of this forward? For me, it’s about moving from what I call cold models toward hot models. A cold model is static and generic, not personalized at all, like most of our current theories of behavior. A warm model uses the personalized data that’s coming in (and we still have a lot of work ahead to discover how best to model it), but the idea is that we should gradually be able to warm these models up until they’re increasingly personalized to you. In the end, what we want is to provide the intervention a person needs at the moment they need it.
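One simple way to picture “warming up” a model, offered here only as an illustrative assumption and not as the Collaboratory’s method, is a normal-normal Bayesian update: the estimate for an individual starts at a population-level (“cold”) mean and shifts toward that person’s own data as observations accumulate. The class name `WarmingEstimate`, the known-noise assumption, and the example outcome (a hypothetical 0 to 10 afternoon stress rating) are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class WarmingEstimate:
    """One person's mean for some outcome, warmed from a population prior.

    Hypothetical illustration of 'cold' to 'warm': a normal-normal Bayesian
    update that starts at the population mean and moves toward the
    individual's own data. Assumes independent observations with a known
    noise variance.
    """

    mean: float       # starts as the population ("cold") mean
    var: float        # current uncertainty about this person's mean
    noise_var: float  # assumed known variance of a single observation

    def update(self, observation: float) -> None:
        # Precision-weighted average of the current estimate and the new data.
        prior_prec = 1.0 / self.var
        obs_prec = 1.0 / self.noise_var
        post_prec = prior_prec + obs_prec
        self.mean = (prior_prec * self.mean + obs_prec * observation) / post_prec
        self.var = 1.0 / post_prec
```

Starting from a hypothetical population mean of 4.0 (prior variance 4.0, noise variance 4.0), three personal ratings of 7, 8 and 7 move the estimate to about 6.5 while its uncertainty shrinks. Each new observation carries relatively more weight early on, so the model personalizes quickly at first and then settles. A real just-in-time intervention would need far richer, time-varying models; this sketch only shows the direction of travel.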

The USC mHealth Collaboratory, which is supported in part by the USC Collaboration Fund, is working to forge transdisciplinary alliances with scientists and artists across disciplines and institutions. Our members and their collaborators are spearheading the development of new, dynamic and highly contextualized models of health-related behaviors as they unfold in real time.
