Editor’s Note: This analysis is part of the USC-Brookings Schaeffer Initiative for Health Policy, which is a partnership between Economic Studies at Brookings and the USC Schaeffer Center for Health Policy & Economics. The Initiative aims to inform the national healthcare debate with rigorous, evidence-based analysis leading to practical recommendations using the collaborative strengths of USC and Brookings.

Vinod Khosla, a legendary Silicon Valley investor, argues that robots will replace doctors by 2035. And there is some evidence that he may be right.

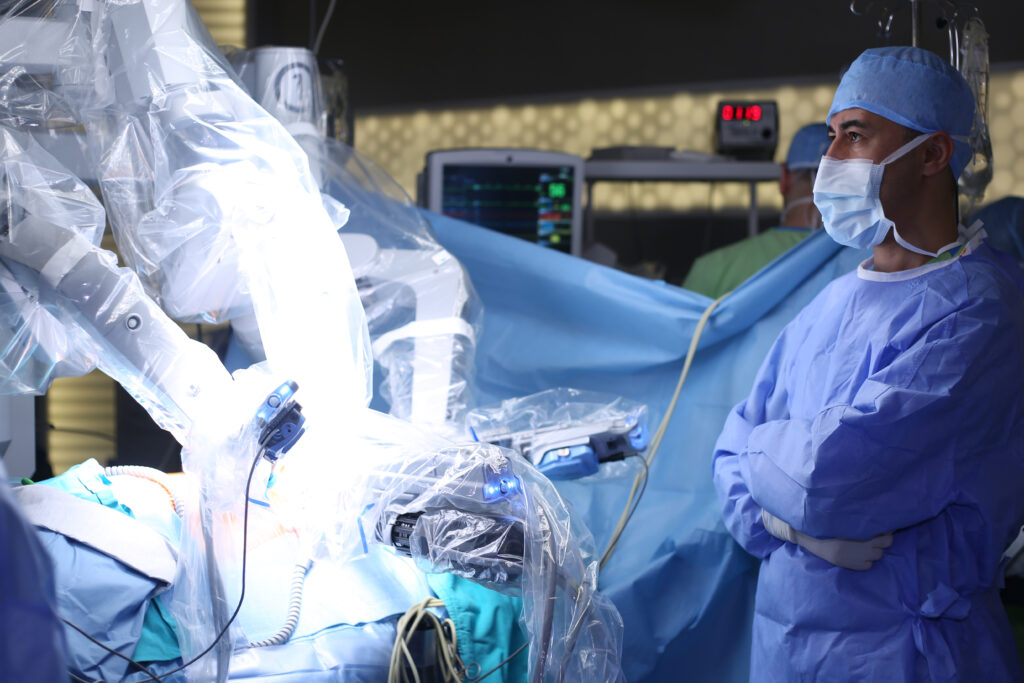

A 2017 study out of Massachusetts General Hospital and MIT showed that an artificial intelligence (AI) system was equal to or better than radiologists at reading mammograms for high-risk cancer lesions needing surgery. A year earlier, in a study published in the Journal of the American Medical Association, Google showed that computers perform comparably to ophthalmologists at examining retinal images of patients with diabetes. And recently, a computer-controlled robot successfully performed intestinal surgery on a pig. Although the robot took longer than a human surgeon, its sutures were much better: more precise and uniform, with fewer chances for breakage, leakage, and infection. Tech boosters believe that AI will lead to more evidence-based care, more personalized care, and fewer errors.

Of course, improving diagnostic and therapeutic outcomes is a laudable goal. But AI is only as good as the humans programming it and the system in which it operates. If we are not careful, rather than making healthcare better, AI could unintentionally exacerbate many of the worst aspects of our current healthcare system.

Using machine learning, including deep learning, AI systems analyze enormous amounts of data to make predictions and recommend interventions. Advances in computing power have enabled the creation and cost-effective analysis of large datasets of payer claims, electronic health record data, medical images, genetic data, laboratory data, prescription data, clinical emails, and patient demographic information to power AI models.

AI is 100 percent dependent on this data, and, as with everything in computing, the rule is “garbage in, garbage out.” A major concern about all our healthcare datasets is that they faithfully record a history of unjustified and unjust disparities in access, treatments, and outcomes across the United States.

According to a 2017 report on healthcare disparities by the National Academy of Medicine, non-whites continue to experience worse outcomes for infant mortality, obesity, heart disease, cancer, stroke, HIV/AIDS, and overall mortality. Shockingly, Alaska Natives suffer 60 percent higher infant mortality than whites. Worse, AIDS mortality among African Americans is actually increasing. Even among whites, there are substantial geographic differences in outcomes and mortality. Biases based on socioeconomic status may be exacerbated by incorporating patient-generated data from expensive sensors, phones, and social media, since such data come disproportionately from patients who can afford these technologies.

The data we are using to train our AI models could lead to results that perpetuate, and even exacerbate, these stubborn disparities rather than remedy them. The machines do not and cannot verify the accuracy of the underlying data they are given. Rather, they assume the data are perfectly accurate, reflect high-quality care, and are representative of optimal care and outcomes. Hence, the models generated will be optimized to approximate the outcomes that we are generating today. AI-generated disparities will also be harder to address than today's, because the models are largely “black boxes”: devised by the machines, difficult to explain, and far harder to audit than our current human healthcare delivery processes.

Another major challenge is that many clinicians make assumptions and care choices that are not neatly documented as structured data. Experienced clinicians develop an intuition that allows them to identify a sick patient even when, by the numbers fed into computer programs, that patient looks identical to another, less ill patient. As a result, some patients are treated differently than others for reasons that will be hard to distill from electronic health record data, because this clinical judgment is not well represented in the data.

These challenges loom large when health systems try to use AI. For example, when the University of Pittsburgh Medical Center (UPMC) evaluated the risk of death from pneumonia among patients arriving in its emergency department, the AI model predicted that mortality dropped when patients were over 100 years of age or had a diagnosis of asthma. The model analyzed the underlying data correctly: UPMC did indeed have very low mortality for these two groups. But it was incorrect to conclude that these patients were at lower risk. Rather, their risk was so high that emergency department staff gave them antibiotics before they were even registered in the electronic medical record, so the time stamps for the lifesaving antibiotics were inaccurate. Without understanding clinician assumptions and their impact on the data (in this case, the accurate timing of antibiotic administration), this kind of analysis could lead to AI-inspired protocols that harm high-risk patients. And this is not an isolated example; clinicians make hundreds of assumptions like these every day, across numerous conditions.
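To make the mechanism in the UPMC example concrete, here is a minimal toy sketch in Python. All mortality rates, the group labels, the `mortality` helper, and the threshold are hypothetical and chosen only for illustration; they are not UPMC data. The key assumption is that asthma patients always receive early antibiotics but the record does not show it, so a model trained on the record sees only the low mortality that treatment produced.

```python
# Toy illustration: the model never sees the early antibiotics, so it mistakes
# the effect of treatment for low baseline risk.
# All rates below are HYPOTHETICAL, not UPMC data.

UNTREATED_RISK = {"asthma": 0.30, "no_asthma": 0.10}  # mortality without early antibiotics
EARLY_ABX_RISK = {"asthma": 0.04, "no_asthma": 0.05}  # mortality with early antibiotics


def mortality(group: str, early_antibiotics: bool) -> float:
    """Hypothetical mortality for a group under a given treatment choice."""
    return EARLY_ABX_RISK[group] if early_antibiotics else UNTREATED_RISK[group]


# Current practice: staff treat asthma patients immediately, but the record
# does not capture it, so the treatment is invisible to the model.
current_practice = {"asthma": True, "no_asthma": False}

# What a model trained on the record "learns": observed mortality per group.
learned_risk = {g: mortality(g, current_practice[g]) for g in current_practice}

# A naive AI-inspired protocol: give early antibiotics only to groups whose
# learned risk exceeds a threshold (hypothetical value).
THRESHOLD = 0.08
protocol = {g: learned_risk[g] > THRESHOLD for g in learned_risk}

# Mortality that would follow if this protocol replaced clinician judgment.
outcome = {g: mortality(g, protocol[g]) for g in protocol}

for g in learned_risk:
    print(f"{g}: learned risk {learned_risk[g]:.0%}, "
          f"mortality under protocol {outcome[g]:.0%}")
```

Under these made-up numbers, the model ranks asthma patients as the lowest-risk group (4 percent versus 10 percent), the protocol therefore withholds early antibiotics from them, and their mortality rises to 30 percent. The model is faithful to the data; the harm comes from what the data leave out.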

Before we entrust our care to AI systems and “doctor robots,” we must first commit to identifying bias in datasets and correcting it as much as possible. Furthermore, AI systems need to be evaluated not just on the accuracy of their recommendations, but also on whether they perpetuate or mitigate disparities in care and outcomes. One approach could be to create national test datasets with and without known biases to understand how adeptly models are tuned to avoid unethical care and nonsensical clinical recommendations. We could go one step further and use peer review to evaluate findings and make suggestions for improving the AI systems. This is similar to the highly effective approach used by the National Institutes of Health for evaluating grant applications and by journals for evaluating research findings. These interventions could go a long way toward improving public trust in AI and perhaps, someday, enabling a patient to receive the kind of unbiased care that human doctors should have been providing all along.
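One way to make the proposed evaluation concrete is to score a model on a test set separately for each demographic group rather than reporting a single accuracy figure. The Python sketch below assumes a labeled test set in which each record carries a group attribute; the group names, the records, and the helper functions are hypothetical, and sensitivity (the share of true cases the model catches) is just one of several metrics an evaluator might choose, not one the authors specify.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Share of truly positive cases the model flags, computed per group.

    records: iterable of (group, true_label, predicted_label), labels 0 or 1.
    """
    positives = defaultdict(int)
    detected = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            if y_pred == 1:
                detected[group] += 1
    return {g: detected[g] / positives[g] for g in positives}


def overall_sensitivity(records):
    """Sensitivity pooled across all groups (the single headline number)."""
    hits = [y_pred for _, y_true, y_pred in records if y_true == 1]
    return sum(hits) / len(hits)


# HYPOTHETICAL test set: (group, has_condition, model_flagged_it)
test_set = [
    ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 0),
    ("group_b", 1, 1), ("group_b", 1, 1), ("group_b", 1, 0), ("group_b", 1, 0),
    ("group_a", 0, 0), ("group_b", 0, 0),
]

by_group = sensitivity_by_group(test_set)
gap = max(by_group.values()) - min(by_group.values())
print("overall:", overall_sensitivity(test_set))
print("by group:", by_group, "gap:", gap)
```

Here the pooled sensitivity of 62.5 percent hides the fact that the model catches 75 percent of true cases in one group but only 50 percent in the other. Reporting the per-group figures and the gap side by side is what would let reviewers judge whether a model mitigates or perpetuates a disparity.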

Dr. Kocher is a senior fellow at the USC Schaeffer Center for Health Policy & Economics, adjunct professor of medicine at Stanford, and partner at Venrock, a venture-capital firm.

Dr. Emanuel is vice provost and chair of the Department of Medical Ethics and Health Policy at the University of Pennsylvania, venture partner at Oak HC/FT, and author most recently of Prescription for the Future: The Twelve Transformational Practices of Highly Effective Medical Organizations (2017).